Building a Highly Available Load Balancer: haproxy + keepalived

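When a company's traffic is small, a single server can meet its needs. As the business grows, the weaknesses of a single server become obvious:

- If the server goes down, the business is interrupted

- As traffic grows, a single server's performance degrades, which raises the question of how to add servers and bandwidth transparently to increase throughput

A load balancer solves both problems.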

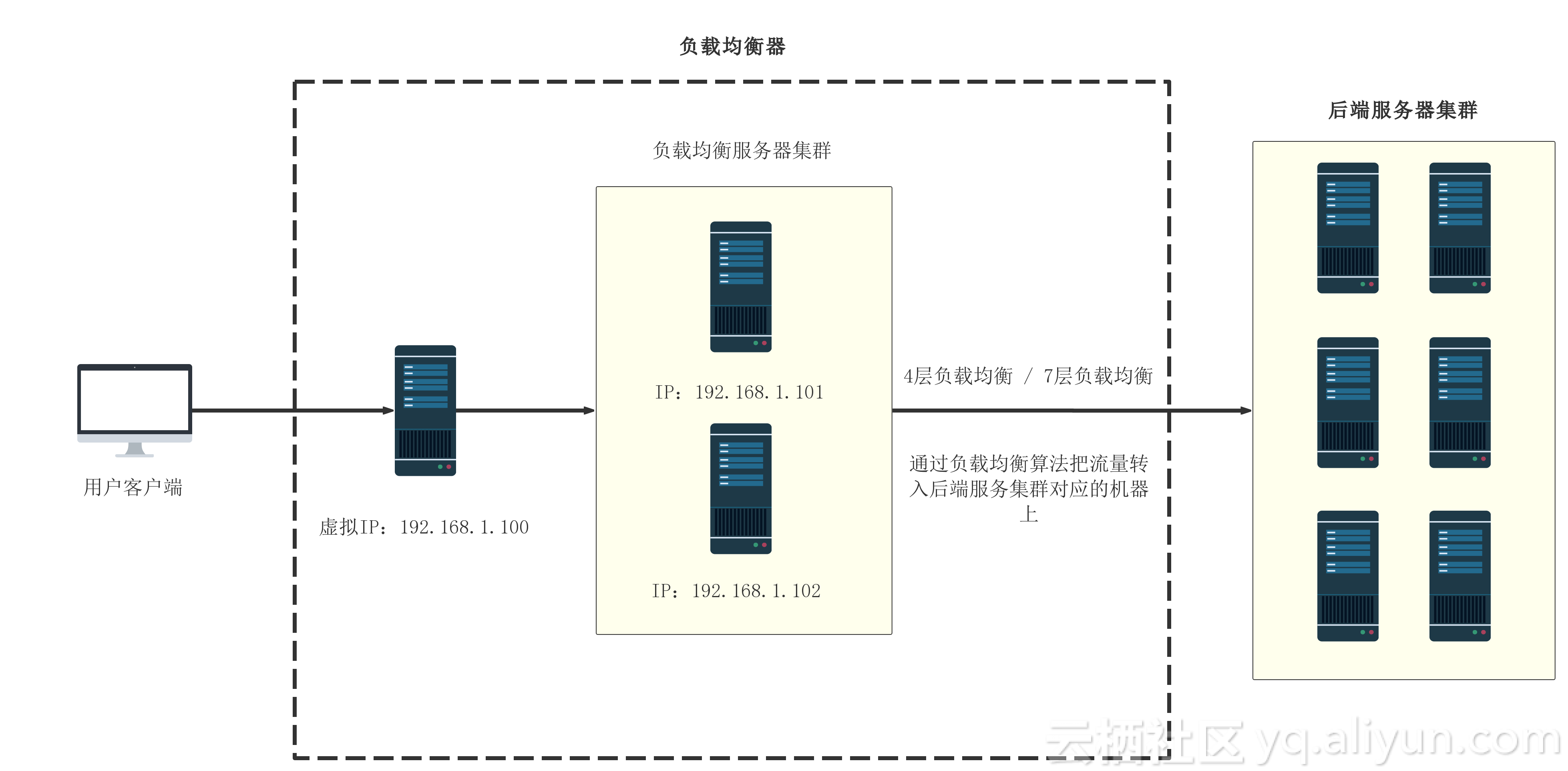

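1 Load Balancer Topology

Following this topology, this article builds a load balancer with haproxy and keepalived.

2 Preparation

2.1 Environment

Prepare five CentOS 7.3 hosts and one unused IP address to serve as the virtual IP (VIP):

- VIP: 192.168.1.100

The load balancer uses two hosts in a one-master, one-backup arrangement:

- lb1 (master by default): 192.168.1.101

- lb2 (backup by default): 192.168.1.102

IP addresses of the hosts in the backend server cluster: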

- s1: 192.168.1.2

- s2: 192.168.1.3

- s3: 192.168.1.4
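Keepalived adds the VIP to the interface named in its configuration and announces it with gratuitous ARP. For that reason the VIP should sit in the same subnet as lb1 and lb2. The shell sketch below is a hypothetical pre-flight check of the address plan above. It assumes every address lives in a /24, as in the `ip a` output later in this article. It only compares strings and does not probe the network.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the address plan in section 2.1.
# Assumption: all addresses are in a /24, so "same subnet" means
# "same first three octets".

# prefix24 IP: print the first three octets of a dotted-quad IPv4 address.
prefix24() {
    local ip=$1
    echo "${ip%.*}"
}

# check_address_plan VIP LB_IP...
# Prints "ok" only if every load balancer address is in the VIP's /24
# and none of them reuses the VIP itself; otherwise prints an error to
# stderr and returns 1.
check_address_plan() {
    local vip=$1
    shift
    local ip
    for ip in "$@"; do
        if [ "$ip" = "$vip" ]; then
            echo "error: $ip is the VIP and must not be a host address" >&2
            return 1
        fi
        if [ "$(prefix24 "$ip")" != "$(prefix24 "$vip")" ]; then
            echo "error: $ip is not in the same /24 as VIP $vip" >&2
            return 1
        fi
    done
    echo "ok"
}
```

For this article's plan, `check_address_plan 192.168.1.100 192.168.1.101 192.168.1.102` prints ok. The backends (s1 to s3) only need to be reachable from lb1 and lb2, so they are not passed in.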

2.2 Host Configuration

2.2.1 Disable the firewall on all hosts

systemctl stop firewalld
systemctl disable firewalld

2.2.2 Disable SELinux on all hosts

setenforce 0

Then edit /etc/selinux/config so that the change survives a reboot:

vi /etc/selinux/config

SELINUX=disabled

2.3 Install haproxy and keepalived

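Install haproxy and keepalived on lb1 and lb2:

yum install haproxy keepalived -y

2.4 Install nginx (skip this step if you already have a backend application)

Install nginx on s1, s2 and s3 to act as the backend. If you have another backend application, skip this step.

yum install epel-release -y
yum install nginx -y

2.5 Configure keepalived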

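Keepalived is a high-availability solution built on VRRP (Virtual Router Redundancy Protocol). It provides high availability through a VIP (virtual IP) and heartbeat detection.

Keepalived has two roles, Master and Backup. Usually there is one Master and one or more Backups.

The Master binds the VIP to its own network interface and serves traffic. It sends VRRP advertisements (the heartbeat) at a fixed interval, and the Backups listen for them. When the Master becomes unavailable and the advertisements stop, a Backup binds the VIP to its own interface and notifies the gateway (by sending gratuitous ARP). The service therefore continues without interruption.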
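The election and failover rules above can be sketched numerically. The helpers below are hypothetical and only do arithmetic. They follow VRRPv2 (RFC 3768), which is keepalived's default VRRP version:

- A Backup declares the Master dead after Master_Down_Interval = 3 * advert_int + (256 - priority) / 256 seconds without advertisements.
- The node with the highest priority becomes Master, with ties broken by the higher primary IP address.

```shell
#!/usr/bin/env bash
# Sketch of two VRRPv2 rules (RFC 3768). These helpers do not talk to
# keepalived; they only reproduce the arithmetic of the protocol.

# master_down_interval ADVERT_INT PRIORITY
# Seconds a Backup waits without hearing the Master before taking over:
#   skew_time   = (256 - priority) / 256
#   master_down = 3 * advert_int + skew_time
master_down_interval() {
    awk -v adv="$1" -v prio="$2" 'BEGIN {
        skew = (256 - prio) / 256
        printf "%.3f\n", 3 * adv + skew
    }'
}

# elect_master: read "name priority ip" lines on stdin and print the name
# of the node that becomes Master: highest priority wins, and on equal
# priority the numerically higher IPv4 address wins.
elect_master() {
    awk '
    {
        prio = $2 + 0
        split($3, o, ".")
        ipnum = ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
        if (NR == 1 || prio > best_prio || (prio == best_prio && ipnum > best_ip)) {
            best_prio = prio; best_ip = ipnum; best_name = $1
        }
    }
    END { print best_name }'
}
```

This article uses advert_int 1 and a BACKUP priority of 99, so `master_down_interval 1 99` prints 3.613. lb2 should therefore claim the VIP roughly 3.6 seconds after lb1 stops advertising. `printf 'lb1 100 192.168.1.101\nlb2 99 192.168.1.102\n' | elect_master` prints lb1, which matches lb1 being the default Master.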

2.5.1 Configure the MASTER

Edit /etc/keepalived/keepalived.conf on lb1 (192.168.1.101):

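! Configuration File for keepalived

global_defs {
    # Notification mail settings
    notification_email {
        # Recipient of notification mails (e.g. when this node gains or loses the VIP;
        # per-instance state-change mails also need smtp_alert enabled)
        your-email@qq.com
    }
    # Sender address
    notification_email_from keepalived@qq.com
    # SMTP server address
    smtp_server 127.0.0.1
    # SMTP connection timeout (seconds)
    smtp_connect_timeout 30
    # String identifying this machine; it also appears in notification mails
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    # MASTER on the primary node
    # BACKUP on the standby node
    state MASTER
    # Interface this instance binds to; use `ip a` to find its name
    interface eno16777984
    # Virtual router ID, a number from 1 to 255; MASTER and BACKUP of the same
    # VRRP instance must use the same ID
    virtual_router_id 88
    # Priority: a larger number means a higher priority; within an instance the
    # MASTER's priority must be higher than the BACKUP's
    priority 100
    # Interval in seconds between the MASTER's VRRP advertisements
    advert_int 1
    # Authentication type and password
    authentication {
        # Two authentication types are available: PASS and AH
        auth_type PASS
        # Password, identical on MASTER and BACKUP (PASS uses only the first 8 characters)
        auth_pass 11111111
    }
    # Virtual IP addresses; there can be several, one per line
    virtual_ipaddress {
        192.168.1.100
    }
}

virtual_server 192.168.1.100 443 {
    # Health-check interval (seconds)
    delay_loop 6
    # Scheduling algorithm
    # Doc: http://www.keepalived.org/doc/scheduling_algorithms.html
    # Round Robin (rr)
    # Weighted Round Robin (wrr)
    # Least Connection (lc)
    # Weighted Least Connection (wlc)
    # Locality-Based Least Connection (lblc)
    # Locality-Based Least Connection with Replication (lblcr)
    # Destination Hashing (dh)
    # Source Hashing (sh)
    # Shortest Expected Delay (sed)
    # Never Queue (nq)
    # Overflow-Connection (ovf)
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP
    # IPVS spreads connections to VIP:443 over the real servers below
    # (haproxy on lb1 and lb2). Each real server is health-checked
    # periodically and taken out of the pool while port 443 does not respond.
    real_server 192.168.1.101 443 {
        weight 1
        TCP_CHECK {
            # Port to connect to
            connect_port 443
            # Connection timeout (seconds)
            connect_timeout 3
        }
    }
    real_server 192.168.1.102 443 {
        weight 1
        TCP_CHECK {
            connect_port 443
            connect_timeout 3
        }
    }
}

2.5.2 Configure the BACKUP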

Edit /etc/keepalived/keepalived.conf on lb2 (192.168.1.102). Note that state is BACKUP and priority is lower than on the MASTER:

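! Configuration File for keepalived

global_defs {
    # Notification mail settings
    notification_email {
        # Recipient of notification mails (e.g. when this node gains or loses the VIP;
        # per-instance state-change mails also need smtp_alert enabled)
        your-email@qq.com
    }
    # Sender address
    notification_email_from keepalived@qq.com
    # SMTP server address
    smtp_server 127.0.0.1
    # SMTP connection timeout (seconds)
    smtp_connect_timeout 30
    # String identifying this machine; it also appears in notification mails
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    # MASTER on the primary node
    # BACKUP on the standby node
    state BACKUP
    # Interface this instance binds to; use `ip a` to find its name
    interface eno16777984
    # Virtual router ID, a number from 1 to 255; MASTER and BACKUP of the same
    # VRRP instance must use the same ID
    virtual_router_id 88
    # Priority: a larger number means a higher priority; within an instance the
    # MASTER's priority must be higher than the BACKUP's
    priority 99
    # Interval in seconds between the MASTER's VRRP advertisements
    advert_int 1
    # Authentication type and password
    authentication {
        # Two authentication types are available: PASS and AH
        auth_type PASS
        # Password, identical on MASTER and BACKUP (PASS uses only the first 8 characters)
        auth_pass 11111111
    }
    # Virtual IP addresses; there can be several, one per line
    virtual_ipaddress {
        192.168.1.100
    }
}

virtual_server 192.168.1.100 443 {
    # Health-check interval (seconds)
    delay_loop 6
    # Scheduling algorithm
    # Doc: http://www.keepalived.org/doc/scheduling_algorithms.html
    # Round Robin (rr)
    # Weighted Round Robin (wrr)
    # Least Connection (lc)
    # Weighted Least Connection (wlc)
    # Locality-Based Least Connection (lblc)
    # Locality-Based Least Connection with Replication (lblcr)
    # Destination Hashing (dh)
    # Source Hashing (sh)
    # Shortest Expected Delay (sed)
    # Never Queue (nq)
    # Overflow-Connection (ovf)
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP
    # IPVS spreads connections to VIP:443 over the real servers below
    # (haproxy on lb1 and lb2). Each real server is health-checked
    # periodically and taken out of the pool while port 443 does not respond.
    real_server 192.168.1.101 443 {
        weight 1
        TCP_CHECK {
            # Port to connect to
            connect_port 443
            # Connection timeout (seconds)
            connect_timeout 3
        }
    }
    real_server 192.168.1.102 443 {
        weight 1
        TCP_CHECK {
            connect_port 443
            connect_timeout 3
        }
    }
}

2.6 Configure haproxy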

Edit /etc/haproxy/haproxy.cfg on both lb1 (192.168.1.101) and lb2 (192.168.1.102).

Add the backend server IPs (192.168.1.2, 192.168.1.3, 192.168.1.4) to the backend section:

#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4096
    user haproxy
    group haproxy
    daemon
    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats

# Default settings for the sections below; without timeouts haproxy warns at startup
defaults
    log global
    timeout connect 5s
    timeout client 50s
    timeout server 50s

listen stats
    bind *:9000
    mode http
    stats enable
    stats hide-version
    stats uri /stats
    stats refresh 30s
    stats realm Haproxy\ Statistics
    stats auth admin:admin

frontend k8s-api
    bind *:443
    mode tcp
    option tcplog
    tcp-request inspect-delay 5s
    tcp-request content accept if { req_ssl_hello_type 1 }
    default_backend k8s-api-backend

backend k8s-api-backend
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    # TLS is passed through unchanged (mode tcp), so target nginx's HTTPS port
    server master1 192.168.1.2:443 maxconn 1024 weight 5 check
    server master2 192.168.1.3:443 maxconn 1024 weight 5 check
    server master3 192.168.1.4:443 maxconn 1024 weight 5 check

2.7 Configure nginx

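Add an SSL certificate to nginx (the configuration steps are omitted here). Because haproxy forwards the TLS connection unchanged (mode tcp), nginx on each backend must serve HTTPS on port 443 itself.

vi /usr/share/nginx/html/index.html

In index.html, change the string "Welcome to nginx" to "Welcome to nginx HA".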
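The manual vi edit above can also be done non-interactively, which is convenient when repeating it on s1, s2 and s3. Below is a minimal sketch. It assumes GNU sed (as shipped with CentOS 7) and assumes the page contains the literal string "Welcome to nginx". `mark_ha_page` and its optional label argument are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: non-interactive version of the index.html edit.
# Assumes GNU sed (-i without a suffix) and the literal text
# "Welcome to nginx" in the page.

# mark_ha_page FILE [LABEL]
# Rewrites "Welcome to nginx" to "Welcome to nginx HA", followed by LABEL
# if one is given. An existing " HA..." suffix is matched too, so running
# it twice does not append a second "HA".
# LABEL must not contain "/" or "&", which are special in sed replacements.
mark_ha_page() {
    local file=$1
    local label=${2:-}
    local new="Welcome to nginx HA"
    if [ -n "$label" ]; then
        new="$new $label"
    fi
    sed -i "s/Welcome to nginx\( HA[^!<]*\)\{0,1\}/$new/" "$file"
}
```

For example, run `mark_ha_page /usr/share/nginx/html/index.html s1` on s1 and the matching command on each other backend. The browser then shows which backend answered, which makes haproxy's roundrobin balancing visible.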

3 Start the Services

3.1 Start nginx (on s1, s2 and s3)

sudo systemctl start nginx
sudo systemctl enable nginx

3.2 Start haproxy (on lb1 and lb2)

sudo systemctl start haproxy
sudo systemctl enable haproxy

3.3 Start keepalived (on lb1 and lb2)

sudo systemctl start keepalived
sudo systemctl enable keepalived

Run ip a on the MASTER:

eno16777984: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000
    link/ether 00:xx:xx:xx:3d:0c brd ff:ff:ff:ff:ff:ff
    inet 192.168.1.101/24 brd 192.168.1.255 scope global eno16777984
       valid_lft forever preferred_lft forever
    inet 192.168.1.100/32 scope global eno16777984
       valid_lft forever preferred_lft forever
    inet6 eeee:eeee:1c9d:2009:250:56ff:fe9c:3d0c/64 scope global noprefixroute dynamic
       valid_lft 7171sec preferred_lft 7171sec
    inet6 eeee::250:56ff:eeee:3d0c/64 scope link
       valid_lft forever preferred_lft forever

The output shows that the VIP (192.168.1.100) is now bound:

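    inet 192.168.1.100/32 scope global eno16777984
       valid_lft forever preferred_lft forever

If the VIP cannot be bound, open /etc/sysctl.conf:

vi /etc/sysctl.conf

and add these two lines:

net.ipv4.ip_forward = 1
net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_nonlocal_bind = 1 allows a process to bind an IP address that is not currently assigned to the host, such as the VIP on the node that does not hold it. net.ipv4.ip_forward = 1 enables IPv4 packet forwarding. Apply the new settings:

sysctl -p

4 Verification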

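4.1 Check the status

1. Open http://192.168.1.100:9000/stats in a browser to view the haproxy statistics page (log in with the stats auth credentials, admin/admin).

2. Open https://192.168.1.100 in a browser and check that the nginx welcome page is displayed.

4.2 Master/backup failover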

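1. Open https://192.168.1.100 in a browser and check that the nginx welcome page is displayed.

2. Shut down lb1 (192.168.1.101) and check whether https://192.168.1.100 is still reachable. If it is, the VIP has failed over to the backup.

3. On lb2 (192.168.1.102), run ip a and check whether the interface now holds the VIP (192.168.1.100).

4. Start lb1 (192.168.1.101) again.

This verifies that the VIP moves back to the MASTER. The MASTER's configuration has priority 100 and the BACKUP's has 99. Because keepalived preempts by default, VRRP binds the VIP again to the host with the higher priority.

Reposted from https://yq.aliyun.com/articles/609851?spm=a2c4e.11153940.bloghomeflow.104.417a291abDIzgA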

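Copyright notice:

Author: 超级管理员 (Super Administrator)

Link: https://apecloud.ltd/article/detail.html?id=72

Source: 猿码云 personal tech site

Copyright belongs to the author. Do not repost without permission.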
